Backpropagation is a fundamental concept in deep learning and artificial intelligence. It is the algorithm that enables neural networks to learn from data and improve their predictions over time. While the term may sound complex, the idea behind backpropagation is both logical and powerful.

Backpropagation lays the foundation for building intelligent systems that can adapt, improve, and make better decisions based on data.

In this blog, we’ll explore what backpropagation is, how it works, and why it plays such an important role in training neural networks.

Understanding the Basics of Neural Networks

To understand backpropagation, it’s helpful to first understand how neural networks work. A neural network is a machine learning model loosely inspired by how the human brain processes information. It is made up of layers of connected nodes, commonly referred to as neurons.

Each neuron receives input, applies a mathematical operation, and passes the result to the next layer. During this process, the network makes a prediction based on the inputs it receives. But in order for the network to make accurate predictions, it must first be trained, and that’s where backpropagation comes in.
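
As a rough illustration of what a single neuron does, here is a minimal sketch in Python. The sigmoid activation, the function names, and the input, weight, and bias values are all assumptions chosen for the example; real networks may use other activations.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Illustrative values only (not taken from any real model)
output = neuron_output(inputs=[0.5, -1.0], weights=[0.8, 0.2], bias=0.1)
print(output)  # a value between 0 and 1, passed on to the next layer
```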

The Role of Backpropagation in Learning

Backpropagation, short for “backward propagation of errors,” is the method used to adjust the weights of the connections between neurons. These weights determine how strongly each input influences the final output. When a neural network makes a prediction, the result is compared to the correct output. The difference between the predicted and actual result is called the error.
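
One common way to put a number on the error is the mean squared error. The sketch below assumes that choice (other error measures exist), and the predictions and targets are made-up values.

```python
def mean_squared_error(predictions, targets):
    """Average of the squared differences between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

# Hypothetical predictions and the correct outputs they are compared with
predictions = [0.9, 0.2, 0.6]
targets = [1.0, 0.0, 1.0]

error = mean_squared_error(predictions, targets)
print(error)  # smaller values mean the predictions are closer to the targets
```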

Backpropagation helps reduce this error by working backwards through the network. It calculates how much each weight contributed to the error and then updates those weights to improve the prediction next time. In simple terms, it teaches the network how to correct its mistakes.
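
To make "how much each weight contributed to the error" concrete, here is a small sketch for a single weight. It assumes a one-input neuron with a sigmoid activation and a squared-error loss, applies the chain rule by hand, and compares the result with a numerical estimate. All names and values are illustrative.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, x, target):
    """Squared error of a one-weight neuron (assumed setup for this example)."""
    prediction = sigmoid(w * x)
    return 0.5 * (prediction - target) ** 2

def analytic_gradient(w, x, target):
    """Chain rule: dLoss/dw = dLoss/dPrediction * dPrediction/dz * dz/dw."""
    prediction = sigmoid(w * x)
    d_loss_d_pred = prediction - target
    d_pred_d_z = prediction * (1.0 - prediction)  # derivative of the sigmoid
    d_z_d_w = x
    return d_loss_d_pred * d_pred_d_z * d_z_d_w

def numeric_gradient(w, x, target, eps=1e-6):
    """Central finite difference, used only to check the chain-rule result."""
    return (loss(w + eps, x, target) - loss(w - eps, x, target)) / (2 * eps)

w, x, target = 0.5, 2.0, 1.0
grad = analytic_gradient(w, x, target)
approx = numeric_gradient(w, x, target)
print(grad, approx)  # the two values should agree closely
```

A negative gradient here means that increasing the weight would lower the error, so the update should push the weight up.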

How Backpropagation Works

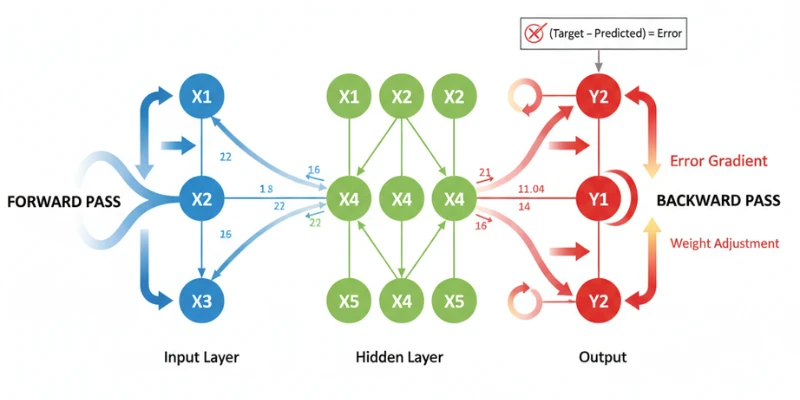

The backpropagation procedure consists of two primary phases: the forward pass and the backward pass.

Forward Pass

During the forward pass, the input data flows through the network layer by layer until it reaches the output. The network uses its current weights to make a prediction. No learning happens at this stage: the network simply produces an output based on the input data and its current weights, which at the start of training are just the initial values.
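
The forward pass can be sketched in a few lines of Python. This is a simplified illustration, assuming fully connected layers with a sigmoid activation. The layer sizes and weight values are arbitrary.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    """weights[j] holds the incoming weights of neuron j in this layer."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward_pass(inputs, layers):
    """Feed the inputs through each (weights, biases) pair in order."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations

# Hypothetical network: 2 inputs -> 3 hidden neurons -> 1 output
layers = [
    ([[0.1, 0.4], [-0.3, 0.2], [0.5, -0.1]], [0.0, 0.0, 0.0]),
    ([[0.3, -0.2, 0.6]], [0.1]),
]
prediction = forward_pass([1.0, 0.5], layers)
print(prediction)  # a one-element list holding the network's output
```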

Backward Pass

After the output is generated, the network calculates the error by comparing its prediction with the actual result. Then, in the backward pass, backpropagation begins. The algorithm goes back through the network and adjusts the weights of each connection based on how much they contributed to the error.
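
The following sketch shows one full cycle of forward pass, backward pass, and weight update on a tiny network. It assumes a 2-2-1 architecture with sigmoid activations, a squared-error loss, and no bias terms (left out to keep it short). The weights and the learning rate are arbitrary illustrative values.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w_hidden, w_out):
    hidden = [sigmoid(sum(xi * w for xi, w in zip(x, row))) for row in w_hidden]
    output = sigmoid(sum(h * w for h, w in zip(hidden, w_out)))
    return hidden, output

def backward(x, target, hidden, output, w_out):
    # Error signal at the output neuron for the loss 0.5 * (output - target)^2
    delta_out = (output - target) * output * (1.0 - output)
    grad_w_out = [delta_out * h for h in hidden]
    # Pass the error signal back to each hidden neuron through its outgoing weight
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(w_out, hidden)]
    grad_w_hidden = [[d * xi for xi in x] for d in delta_hidden]
    return grad_w_hidden, grad_w_out

x, target = [1.0, 0.5], 1.0
w_hidden = [[0.1, 0.4], [-0.3, 0.2]]
w_out = [0.3, -0.2]
learning_rate = 0.5  # assumed value

hidden, output = forward(x, w_hidden, w_out)
loss_before = 0.5 * (output - target) ** 2
grad_w_hidden, grad_w_out = backward(x, target, hidden, output, w_out)

# Move every weight a small step against its gradient
w_hidden = [[w - learning_rate * g for w, g in zip(row, g_row)]
            for row, g_row in zip(w_hidden, grad_w_hidden)]
w_out = [w - learning_rate * g for w, g in zip(w_out, grad_w_out)]

_, new_output = forward(x, w_hidden, w_out)
loss_after = 0.5 * (new_output - target) ** 2
print(loss_before, loss_after)  # the loss should be lower after the update
```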

The weight updates themselves are made with a technique called gradient descent. Backpropagation supplies the gradient of the error with respect to each weight, and gradient descent uses that gradient to nudge the weights in the direction that reduces the error, step by step.
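
Here is a minimal sketch of gradient descent on the simplest possible model, a single weight with the prediction `w * x`. The function name, learning rate, and number of steps are assumptions for illustration.

```python
def train_weight(x, target, w=0.0, learning_rate=0.1, steps=50):
    """Repeatedly step the weight against the gradient of the squared error."""
    history = []
    for _ in range(steps):
        prediction = w * x
        error = 0.5 * (prediction - target) ** 2
        history.append(error)
        gradient = (prediction - target) * x  # derivative of the error w.r.t. w
        w -= learning_rate * gradient
    return w, history

# For x = 2 and target = 6, the weight that gives zero error is 3
w, history = train_weight(x=2.0, target=6.0)
print(w, history[0], history[-1])
```

The learning rate controls the step size: too small and training is slow, too large and the updates can overshoot the minimum.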

Why Backpropagation Is So Important

Without backpropagation, training deep learning models would be extremely difficult. It enables the network to learn from its errors and improve its performance with each training example. Over time, the model becomes better at recognizing patterns and making accurate predictions.

This algorithm has enabled significant progress in artificial intelligence, in areas such as image recognition and natural language processing. It forms the backbone of many modern AI systems.

Challenges and Considerations

While backpropagation is powerful, it also comes with challenges. One of the most common issues is the vanishing gradient problem. This occurs when the gradients used to update the weights shrink as they are passed back through many layers and become too small, slowing down or completely stopping learning in the earlier layers of deeper networks.
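
A rough way to see why gradients vanish: the derivative of the sigmoid is never larger than 0.25, and the backward pass multiplies one such factor per layer. The sketch below makes the simplifying assumption that every layer contributes the same factor and ignores the weights entirely.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_derivative(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # reaches its maximum of 0.25 at z = 0

def gradient_scale(depth, z=0.0):
    """Product of sigmoid derivatives across `depth` layers (weights ignored)."""
    return sigmoid_derivative(z) ** depth

for depth in (1, 5, 10, 20):
    print(depth, gradient_scale(depth))  # shrinks rapidly as depth grows
```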

To address this, researchers have developed various techniques and improvements, such as using different activation functions or optimization methods. These advancements help make backpropagation more efficient and reliable in complex models.
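
One widely used alternative activation function is ReLU. As a simplified sketch (again ignoring the weights, and assuming every neuron receives a positive input), its derivative is 1 for positive inputs, so the per-layer factor does not shrink the gradient.

```python
def relu(z):
    return max(0.0, z)

def relu_derivative(z):
    return 1.0 if z > 0 else 0.0

def relu_gradient_scale(depth, z=1.0):
    """Product of ReLU derivatives across `depth` layers (weights ignored)."""
    return relu_derivative(z) ** depth

print(relu_gradient_scale(20))  # stays at 1.0 for positive inputs
```

ReLU has its own trade-off: for negative inputs the derivative is 0, so a neuron that only ever sees negative inputs stops learning.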

Backpropagation is the learning engine behind neural networks. By adjusting weights through repeated cycles of prediction and correction, it allows AI models to learn from data and improve over time. Although the concept involves mathematical operations, the core idea is intuitive: learning by minimizing error.

Understanding backpropagation is essential for anyone interested in artificial intelligence or machine learning. It remains one of the most important breakthroughs in the development of intelligent systems.
